Freethink Research is a collaboration with our research team at Nightview Capital. Subscribe to their weekly newsletter, The Nightcrawler. Follow Eric and Cam on X.

Data centers may not sound exciting, but they’ve become the critical backbone of AI’s explosive growth in the last few years.

AI relies on immense data processing power, which requires data centers to be both powerful and efficient. As AI continues to advance, these centers are drawing ever more electricity, creating huge pressure (and incentives) to become more energy-efficient.

This edition of Freethink Research explores the evolution of data center efficiency, focusing on the relationship between data, computational needs, and energy efficiency in the AI era. We explore the rising need for computational power from AI and cloud computing, and we also examine how leading cloud providers like AWS, Google, and Microsoft are making their centers more efficient with better coding and expansion strategies.

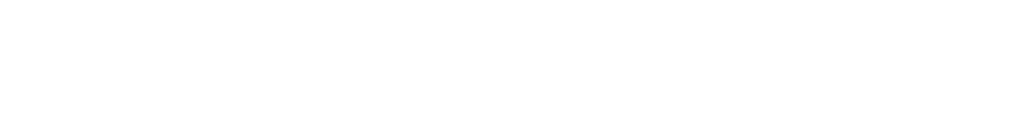

By 2026, data centers will consume twice as much power as France

Sources: IEA, Dallas Fed, Statista

According to estimates from the International Energy Agency (IEA), the rapid growth of AI and traditional data centers is poised to significantly increase global energy demands. By 2026, these facilities could require roughly double the electricity that a huge economy like France or Texas consumes in a year.

This comparison highlights the scale of energy usage driven by the expanding AI sector and its infrastructure. Texas, known for its large industrial base and growing population, already consumes more electricity than any other state, surpassing the energy needs of many entire countries. The fact that data centers alone could soon require this level of power underscores the immense strain the technology sector is placing on global energy resources, emphasizing the need for more sustainable energy solutions to keep up with the surging demands of the digital economy.

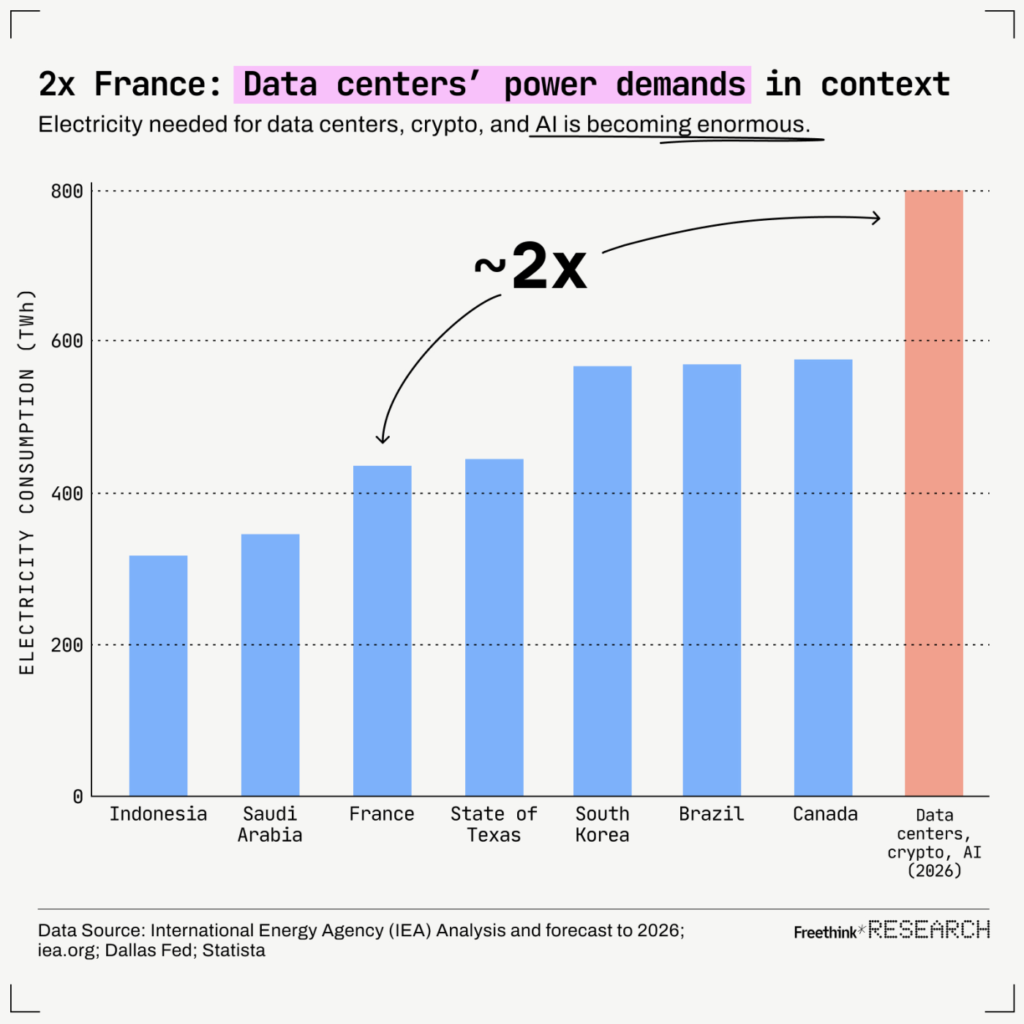

Average data center efficiency hasn’t improved in a decade

Source: Statista

Over the last 20 years, data centers have become much more efficient, though most of that progress came early. A key measure of this is Power Usage Effectiveness (PUE): the total energy a facility draws divided by the energy that reaches its computing equipment, with the remainder going to overhead like cooling.

A PUE of 1 is perfect: it means all the energy goes to computing. A PUE of 2 means half is used for cooling and other tasks. In 2007, the average PUE was 2.5, meaning 60% of the energy data centers drew never reached the computing equipment. With better technology and cooling systems, the average PUE dropped to 1.6 by 2013, a big improvement in efficiency, but it has stayed there ever since.
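
To make the arithmetic concrete, here is a minimal Python sketch of the PUE figures discussed above. It assumes the standard definition of PUE (total facility energy divided by IT equipment energy); the function names `pue` and `it_share` are ours, chosen for illustration.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def it_share(pue_value: float) -> float:
    """Fraction of total facility energy that reaches the computing equipment."""
    if pue_value < 1:
        raise ValueError("PUE cannot be below 1")
    return 1 / pue_value


# PUE values mentioned in the text: 2007 average, the "half" example,
# the average since 2013, and the theoretical ideal.
for value in (2.5, 2.0, 1.6, 1.0):
    print(f"PUE {value}: {it_share(value):.1%} of energy reaches IT equipment")
```

Read this way, the drop from 2.5 to 1.6 means the share of energy doing actual computing rose from 40% to 62.5%.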

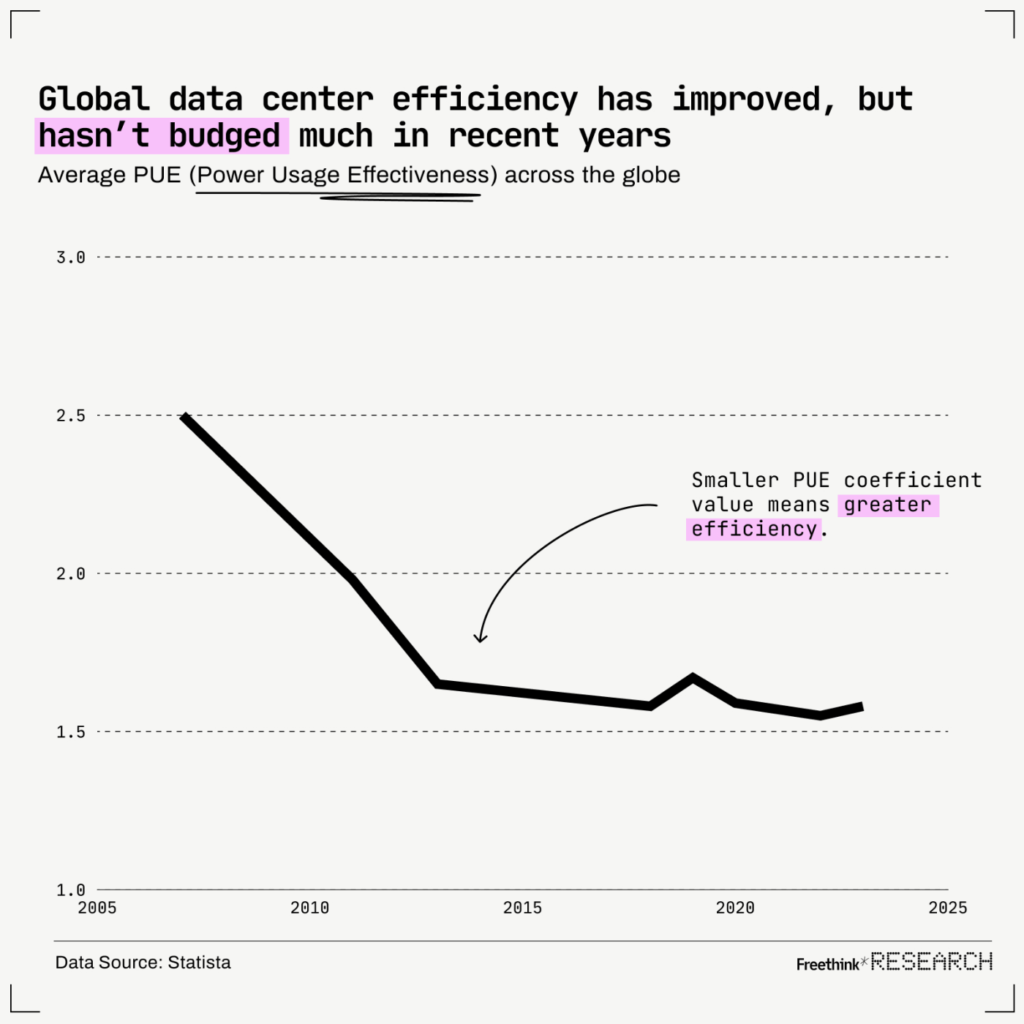

Hyperscale cloud data centers are becoming incredibly efficient

Source: Nutanix

When we look at big cloud providers like Amazon Web Services (AWS), Google Cloud, and Microsoft Azure, it’s clear they’ve unlocked big improvements in energy efficiency. By May 2023, their PUE values were 1.2 or lower, meaning more than 80% of their energy goes to computing, not cooling and other overhead. These giant data centers use their size and advanced technology to be more efficient, which helps them save money and outcompete smaller-scale operations. As AI grows, these kinds of efficiency gains will be even more important for handling the increasing demand for data processing.
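
As a rough illustration of what that gap is worth, the sketch below compares a facility at the 1.6 industry average with one at the hyperscalers' 1.2, for the same computing workload. The 100 MWh workload is a hypothetical figure chosen for easy arithmetic, and the function names are ours; PUE is taken as total facility energy divided by the energy used by computing equipment.

```python
def total_facility_energy(it_energy_mwh: float, pue: float) -> float:
    """Total energy a facility draws, given its IT energy and its PUE."""
    if pue < 1:
        raise ValueError("PUE cannot be below 1")
    return it_energy_mwh * pue


def relative_saving(pue_before: float, pue_after: float) -> float:
    """Fraction of total energy saved when PUE improves, for the same IT workload."""
    return 1 - pue_after / pue_before


IT_ENERGY_MWH = 100.0  # hypothetical workload, not a figure from the charts

average = total_facility_energy(IT_ENERGY_MWH, 1.6)
hyperscale = total_facility_energy(IT_ENERGY_MWH, 1.2)

print(f"Average facility (PUE 1.6): {average:.0f} MWh")
print(f"Hyperscale facility (PUE 1.2): {hyperscale:.0f} MWh")
print(f"Saving for the same computing work: {relative_saving(1.6, 1.2):.0%}")
```

Under these assumptions, the hyperscale facility draws a quarter less energy for identical computing work, and that saving holds at any workload size.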

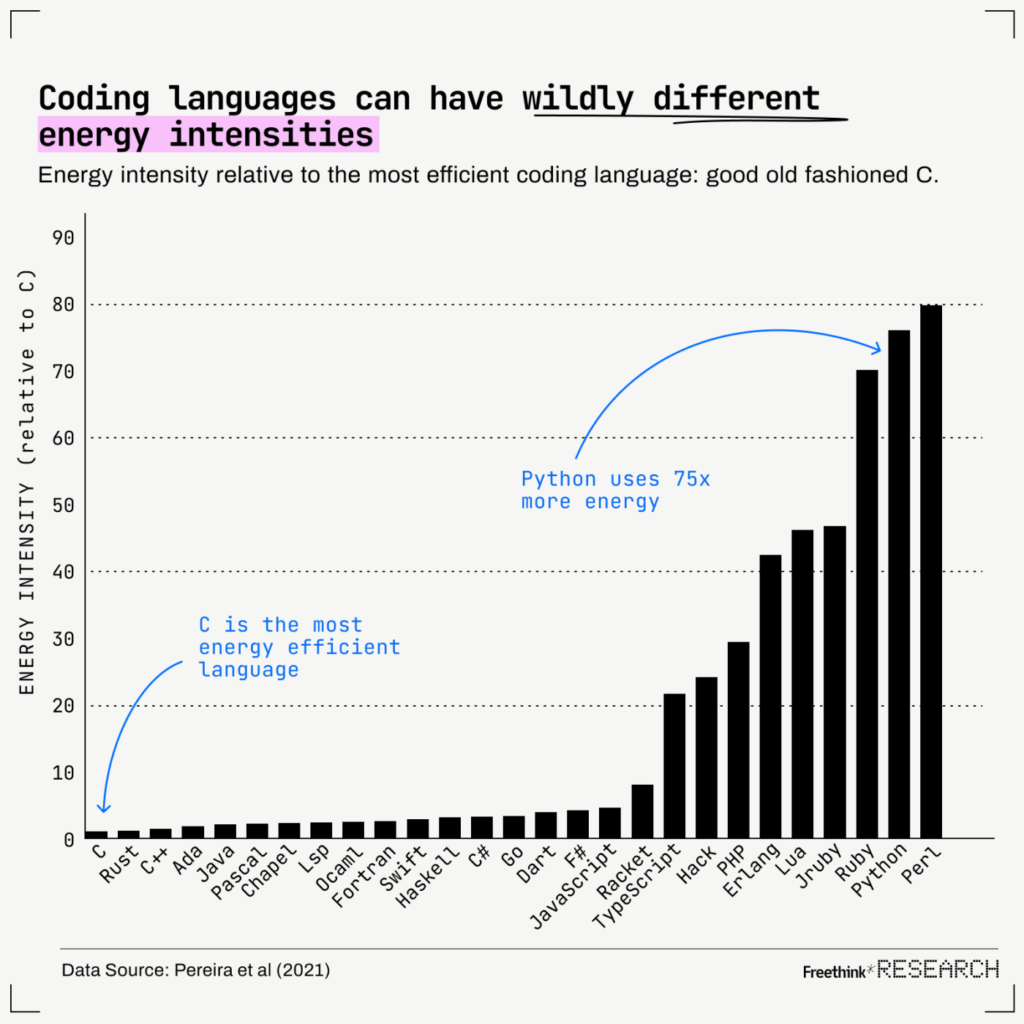

The world’s most popular coding languages vary enormously in energy intensity

Source: Pereira et al (2021)

The software used in data centers affects how much energy they use. A 2021 study found that Python, a popular and easy-to-use coding language, is considerably less energy efficient than languages like Java and C.

This matters because Python is used a lot in apps like Spotify and AI systems. Languages like Java and C, by contrast, use less energy to do the same tasks. This suggests that Python code may need to be optimized to approach the energy efficiency of those languages.
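
One common form of that optimization is moving hot loops out of the interpreter and into compiled code. The sketch below is only an illustration, not a reproduction of the study: it times the same sum computed with an interpreted Python loop and with the built-in `sum`, which is implemented in C in the standard CPython interpreter. Wall-clock time is used here as a rough stand-in for energy, which is an assumption; the function names are ours.

```python
import timeit


def sum_in_interpreter(n: int) -> int:
    """Add the integers 0..n-1 one at a time in interpreted Python bytecode."""
    total = 0
    for i in range(n):
        total += i
    return total


def sum_in_builtin(n: int) -> int:
    """Same result, but the loop runs inside CPython's C implementation of sum()."""
    return sum(range(n))


if __name__ == "__main__":
    n = 1_000_000
    # Both functions must agree before the timings mean anything.
    assert sum_in_interpreter(n) == sum_in_builtin(n)

    loop_seconds = timeit.timeit(lambda: sum_in_interpreter(n), number=5)
    builtin_seconds = timeit.timeit(lambda: sum_in_builtin(n), number=5)

    print(f"Interpreted loop: {loop_seconds:.3f} s")
    print(f"Built-in sum:     {builtin_seconds:.3f} s")
```

Exact timings depend on the machine and Python version, so none are quoted here. The same idea is behind the way Python's numerical and AI libraries hand their heavy lifting to compiled code.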

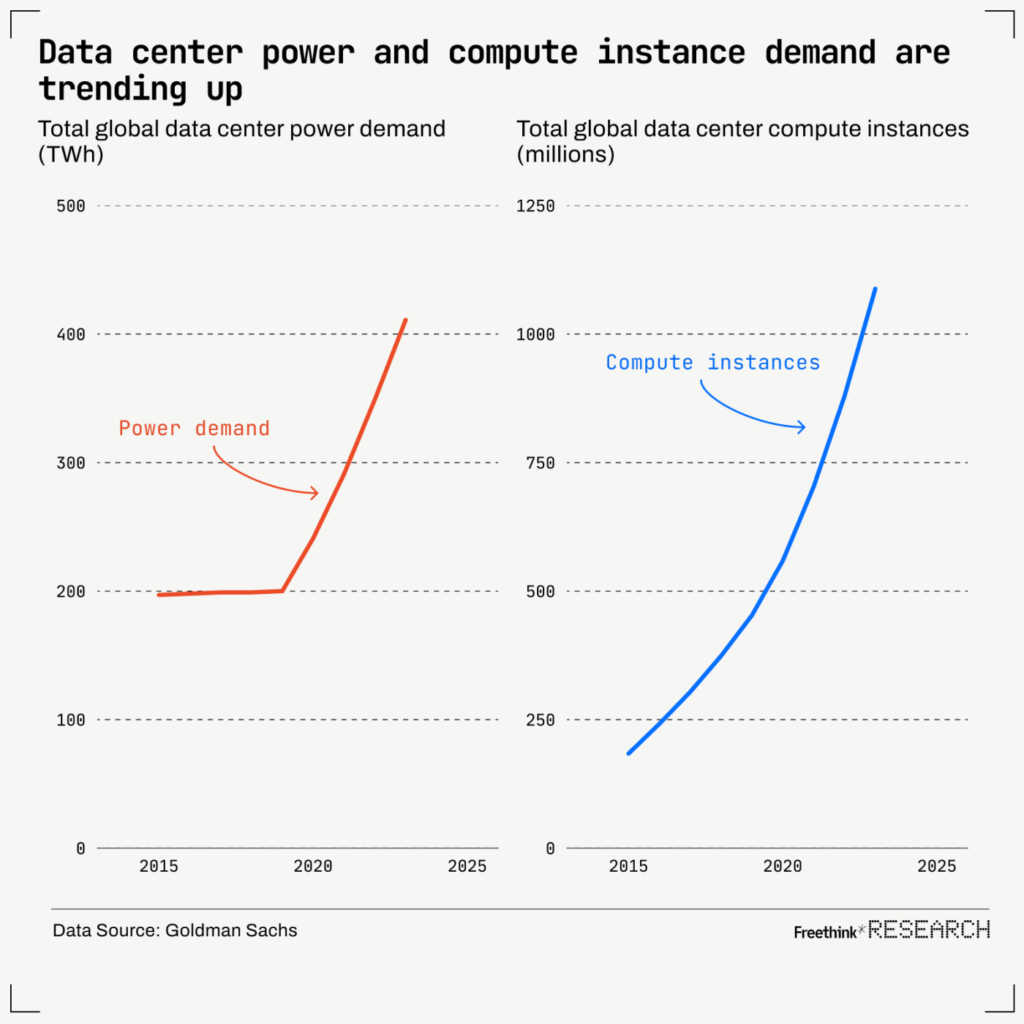

Demands for power and compute instances are soaring

Source: Goldman Sachs

Since 2019, data center power usage has roughly doubled, and the number of compute instances has doubled as well. This is mainly due to the growth of cloud computing, data-heavy apps, and artificial intelligence (AI). There were efficiency gains between 2015 and 2019, when compute instances grew but power demand stayed flat. Since then, the digital surge from the COVID-19 pandemic and the rise of AI apps like ChatGPT have pushed up demand for computing, and power use has risen with it.

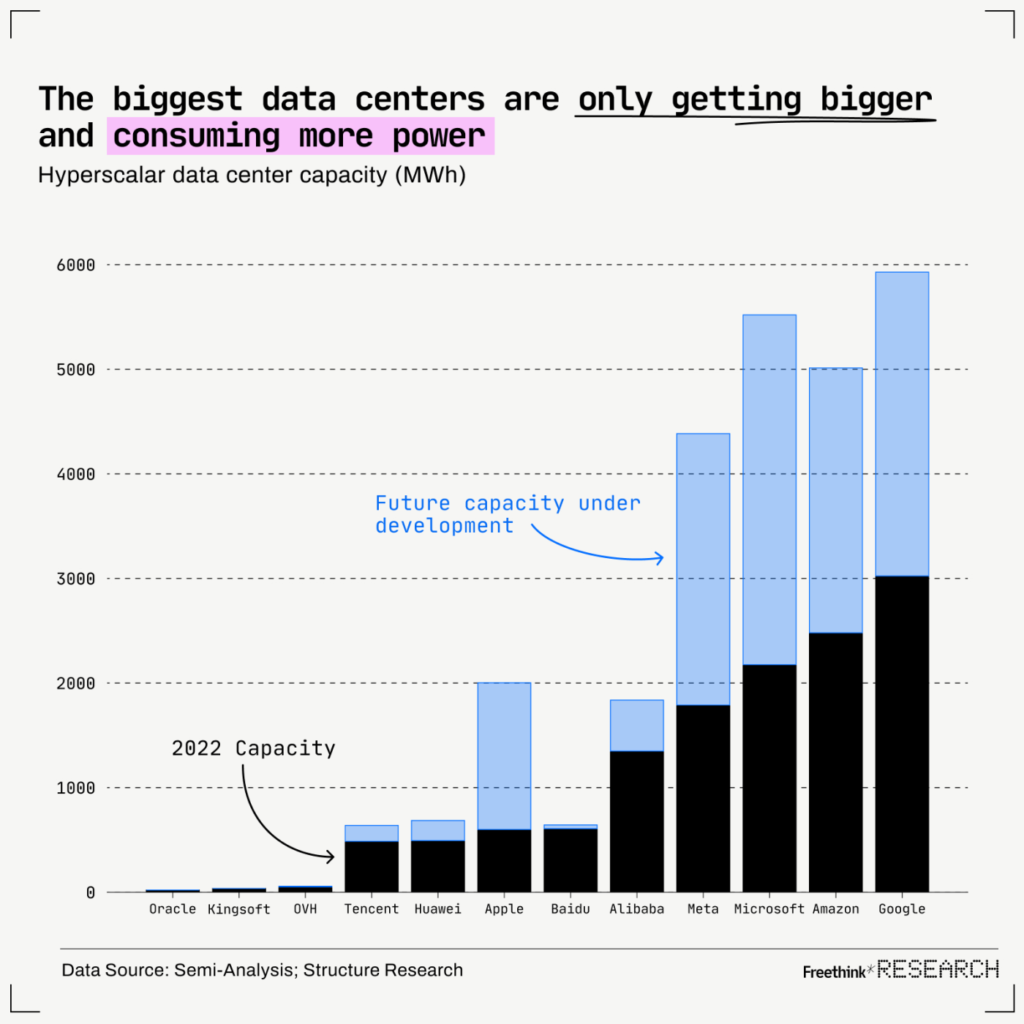

The data center giants are only getting bigger

This final chart shows just how much the biggest companies dominate the data center market. In 2022, Google, Amazon, Microsoft, and Meta were leading the charge, with massive data centers and big plans to grow even more. Meta, in particular, is spending a lot of money to catch up with the top three. Even Apple, known mostly for its consumer hardware, is now investing heavily in data centers, possibly hinting at big moves into AI and the cloud. These tech giants are continuing to build bigger and more powerful data centers, cementing their place as the Goliaths of the market.

Conclusion

As artificial intelligence (AI) continues to reshape industries, the pressure on data centers to deliver massive computational power is only growing. This makes finding new ways to create energy-efficient, sustainable systems more critical than ever. Balancing the exploding demand for AI-driven data processing with energy efficiency will be key to ensuring that these systems don’t overwhelm our grids or impose unnecessary environmental costs.

The advances in Power Usage Effectiveness (PUE), the growth of hyperscalers, and coding language optimization show that the industry is making progress, but finding creative new ways to deliver more computing power with less energy will be essential as the AI revolution continues.